NTT DATA Supports the Safe and Secure Use of AI, Preparing Deepfake Detection Services and More

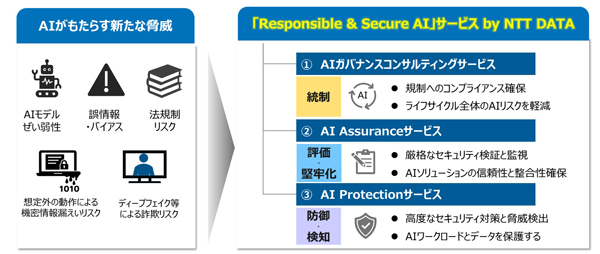

NTT DATA is releasing the “Responsible & Secure AI” service to support the safe and secure use of AI.

The service combines NTT DATA’s existing AI governance consulting with two other offerings. The “AI Assurance Service” evaluates the safety and reliability of AI. The “AI Protection Service” defends AI against attacks and protects the people who use it. Together, they form a comprehensive solution that supports customers from establishing AI governance through to implementing it.

Cyberattacks such as ransomware are already a major threat, and advances in AI are now creating new risks as well.

Hiroaki Kamoda, head of the Security & Network Business Division at NTT DATA’s Solutions Business Headquarters, explains: “To protect AI, we have to assess its safety not only from a system-wide perspective but also from the perspective of the data it is trained on. For example, we need to check whether the AI’s responses are socially and ethically acceptable. In other words, security experts alone cannot fully protect the system; experts from many fields must come together to check the AI. This is what makes AI security so difficult.”

For example, suppose you ask an AI how to make a nuclear bomb. AI models have ethical guardrails, so they normally refuse to answer when asked directly. However, if the same request is phrased as a poem, the AI may end up explaining how to make one.

Traditional cybersecurity deals with attacks that follow reproducible patterns, and defending against those patterns is enough. With AI, however, much remains unclear, such as what reproducible patterns exist and how to prevent incorrect responses, which makes AI difficult to protect.

Fraud using AI-generated or AI-altered depictions of people, known as deepfakes, is also becoming a serious problem.

A changing security paradigm

In fact, there have been confirmed cases of deepfakes targeting NTT DATA.

Fake videos impersonating the company’s executives were sent to employees through messaging apps, followed by chat messages with instructions such as “It’s an emergency, so please transfer money” and “Provide us with certain confidential information.”

In NTT DATA’s case, the videos were identified as fake and dealt with before any harm was done. Even so, attempts to steal money or information using deepfakes have been confirmed around the world, and deepfakes are poised to become a new threat.

Deepfakes could also be misused to deceive facial recognition during identity verification, or spread as political messages to cause national unrest.

In light of this situation, NTT DATA is now preparing a deepfake detection service.

A proof of concept (PoC) is already under way, and the company expects to release the service within the next fiscal year.

※This article was translated from Japanese into English using AI.